Average Weight Information Gain for Handling High-Dimensional Data Using the C4.5 Algorithm

DOI: https://doi.org/10.24002/jbi.v8i3.1315

Abstract.

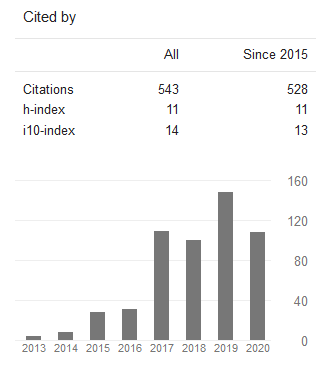

In recent decades, companies and organizations have stored large volumes of data. Such data is of little use unless it is processed into information suited to its purpose. The method used to process data into information is called data mining. A problem in data mining, particularly in classification, is data with a large number of attributes, not all of which are relevant (high-dimensional data). This study proposes an attribute weighting method based on weight information gain, after which the average of the attribute weights is computed. Attributes are then selected: those whose weight is above the average are kept. The selected attributes are classified using the C4.5 algorithm; this method is named Average Weight Information Gain C4.5 (AWEIG-C4.5). The results show that AWEIG-C4.5 outperforms C4.5, with average accuracies of 0.906 and 0.898, respectively.

Keywords: data mining, high-dimensional data, weight information gain, C4.5 algorithm

Abstract (Indonesian version, translated). In recent decades, large volumes of data have been stored by companies and organizations. In terms of use, such data is useless unless it is processed into information suited to its purpose. The method used to process data into information is data mining. A problem in data mining, particularly in classification, is data with a large number of attributes, known in computing as high-dimensional data. This study proposes an attribute weighting method based on weight information gain, after which the average of the attribute weights is computed. Once the average is computed, attributes are selected: those whose weight is above the average are kept. The selected attributes are then classified using the C4.5 algorithm; this method is named Average Weight Information Gain C4.5 (AWEIG-C4.5). The results show that AWEIG-C4.5 outperforms C4.5, with average accuracies of 0.906 and 0.898, respectively. A paired t-test shows a significant difference between the AWEIG-C4.5 and C4.5 methods.

Keywords: data mining, high-dimensional data, weight information gain, C4.5 algorithm
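
The attribute-selection step described in the abstract can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: attributes are assumed to be discrete, the weight is taken to be plain information gain (the abstract does not specify any normalization), the function names are hypothetical, and the toy data is invented. The C4.5 classification step itself is not shown; the selected attributes would be passed to a C4.5 implementation of one's choice.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return -sum((n / total) * math.log2(n / total) for n in Counter(labels).values())


def information_gain(column, labels):
    """Label entropy minus the weighted entropy after splitting on a discrete attribute."""
    total = len(labels)
    groups = {}
    for value, label in zip(column, labels):
        groups.setdefault(value, []).append(label)
    remainder = sum(len(g) / total * entropy(g) for g in groups.values())
    return entropy(labels) - remainder


def select_above_average(rows, labels, names):
    """Weight each attribute by information gain and keep those above the mean weight."""
    weights = {
        name: information_gain([row[i] for row in rows], labels)
        for i, name in enumerate(names)
    }
    average = sum(weights.values()) / len(weights)
    selected = [name for name in names if weights[name] > average]
    return weights, average, selected


# Toy data, invented for illustration only (assumption, not from the paper).
names = ["outlook", "windy", "id_parity"]
rows = [
    ("sunny", "no", "even"),
    ("sunny", "yes", "odd"),
    ("rain", "no", "even"),
    ("rain", "yes", "odd"),
]
labels = ["play", "play", "stay", "stay"]

weights, average, selected = select_above_average(rows, labels, names)
print(selected)  # ['outlook']
```

In this toy case only `outlook` separates the classes, so its weight (1.0) is above the average (1/3) and the two uninformative attributes are dropped. A strict "greater than" comparison is used here; whether attributes exactly at the average are kept is an assumption.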


License

Copyright of this journal is assigned to Jurnal Buana Informatika as the journal publisher with the author's knowledge, while the moral rights of the publication belong to the author. All print and electronic publications are open access for educational, research, and library purposes. The editorial board is not responsible for copyright violations arising from uses other than those mentioned above. Reproduction of any part of this journal (print or online) is allowed only with written permission from Jurnal Buana Informatika.

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.